You’ve been working hard on your website, creating content, and optimizing for SEO. But then you notice your rankings dropping, and you can’t figure out why.

Here’s the thing – you might have a duplicate content problem lurking in the shadows.

According to a 2025 Ahrefs study, 29% of websites suffer from duplicate content issues that significantly impact their search rankings (Ahrefs Blog). Don’t worry, though. I’ve been there, and I’m going to walk you through everything you need to know about duplicate content. By the end of this guide, you’ll know exactly how to spot it, fix it, and prevent it from happening again.

What is Duplicate Content?

Let’s start with the basics. Duplicate content is exactly what it sounds like – content that appears in more than one place on the internet. But here’s where it gets tricky: even small differences in URLs can create duplicate content issues that confuse search engines.

Think of it like this: if you have the same article appearing on both yoursite.com/blog/seo-tips and yoursite.com/blog/seo-tips/ (notice that trailing slash), Google sees these as two different pages with identical content. Not ideal.

Google defines duplicate content as “substantive blocks of content within or across domains that either completely match other content or are appreciably similar.” (Google Search Central). In plain English, this means content that’s either exactly the same or very similar to content found elsewhere.

Types of Duplicate Content

Now, duplicate content isn’t just one thing. There are several types you need to be aware of:

Internal vs. External Duplication

Internal duplicate content happens within your own website. This could be:

- The same product description appearing on multiple product pages

- Blog posts that cover very similar topics with overlapping content

- Pages accessible through multiple URLs (like the trailing slash example above)

- Print-friendly versions of pages that aren’t properly handled

External duplicate content occurs when your content appears on other websites, or vice versa. Common scenarios include:

- Syndicated content that appears on multiple sites

- Guest posts published on different platforms

- Content scrapers copying your articles

- Press releases distributed across news sites

Near-duplicate vs. Exact Duplicate

Exact duplicate content is word-for-word identical. This is the most obvious type, but it’s not always the most problematic from an SEO standpoint because it’s easy for Google to identify the original source.
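Exact duplicates are the easy case because a site owner can flag them mechanically. Here is a minimal Python sketch of one common audit technique: normalize each page's visible text (collapse whitespace, lowercase) and group URLs whose text hashes the same. It assumes you already have each page's extracted text in a dict. The `pages` data and its URLs are hypothetical, and this is not a description of how Google itself detects duplicates.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text: str) -> str:
    """Hash page text after collapsing whitespace and lowercasing it."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_exact_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Group URLs whose normalized text is identical."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    # Only groups holding more than one URL are duplicates
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical extracted page text, keyed by URL
pages = {
    "https://yoursite.com/blog/seo-tips": "Ten SEO tips  for 2025.",
    "https://yoursite.com/blog/seo-tips/": "ten seo tips for 2025.",
    "https://yoursite.com/blog/link-building": "How to earn links.",
}
print(find_exact_duplicates(pages))
```

The trailing-slash pair from earlier lands in the same group, while the unrelated post does not.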

Near-duplicate content is trickier. This includes content that’s very similar but has small variations, like:

- Pages with only the location changed (“Best pizza in New York” vs. “Best pizza in Chicago”)

- Product pages with minimal differences in specifications

- Articles that have been lightly rewritten or spun

Here’s the kicker: near-duplicate content is often more problematic because it’s harder for search engines to determine which version to show in search results. Research from SEMrush indicates that near-duplicate content affects 78% more websites than exact duplicate content issues (SEMrush Blog).
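Hashing can't catch near-duplicates, because changing one word changes the whole hash. A widely used alternative is to split each text into overlapping word sequences ("shingles") and measure how many they share (Jaccard similarity). The sketch below applies that to the pizza example. The shingle size of 3 and any threshold you pick are judgment calls, and nothing here claims to reproduce Google's own method.

```python
import re


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of k-word shingles (overlapping word sequences)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Share of shingles the two texts have in common, from 0.0 to 1.0."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


ny = "The best pizza in New York is thin, foldable and sold by the slice."
chi = "The best pizza in Chicago is thin, foldable and sold by the slice."
score = jaccard(shingles(ny), shingles(chi))
print(round(score, 2))  # prints 0.53
```

Swapping a single place name still leaves the two pages sharing over half their shingles, which is why location-swapped pages show up as near-duplicates.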

Why Duplicate Content Hurts Your SEO

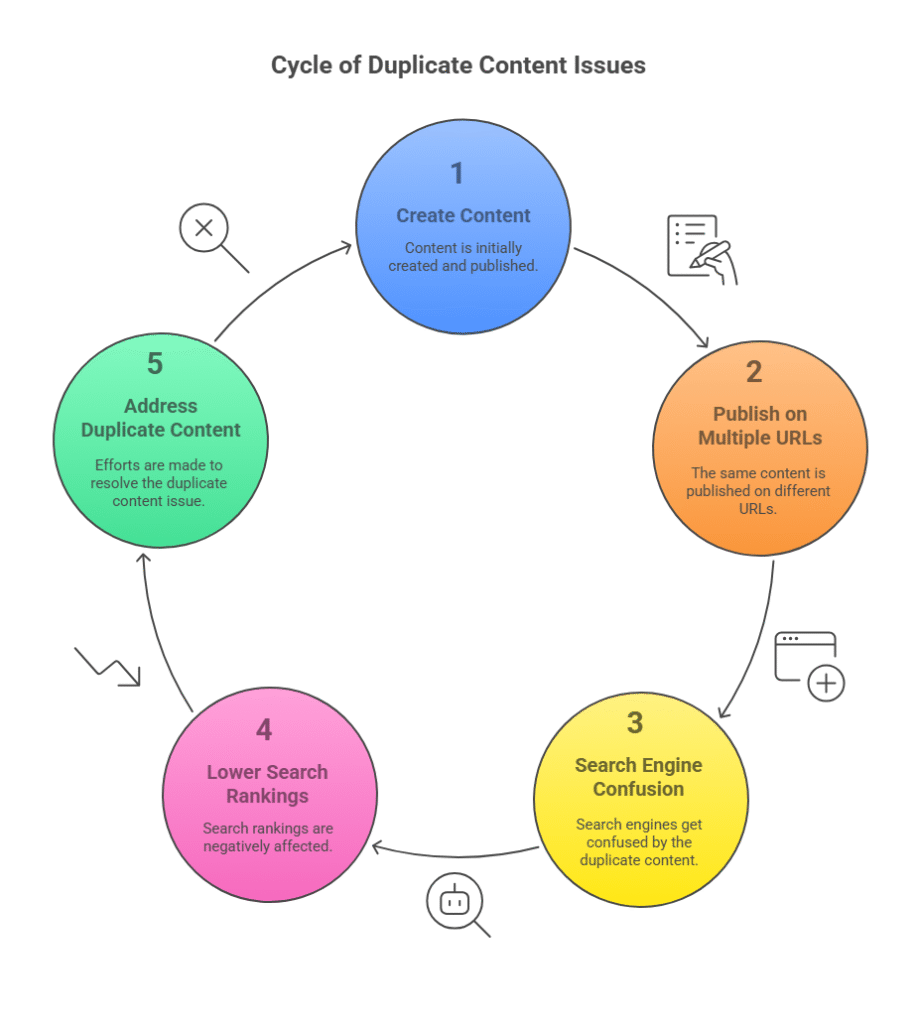

How Google Treats Duplicate Pages

Let me clear something up right away: Google doesn’t “penalize” duplicate content in the traditional sense. You won’t get slapped with a manual penalty for having duplicate content (unless you’re doing something really spammy).

Instead, Google has a filtration process. When Google finds duplicate content, it tries to:

- Identify which version is the “original” or most authoritative

- Choose one version to show in search results

- Filter out the other versions from search results

The problem? Google doesn’t always choose the version you want them to choose. Sometimes they pick a version that’s not optimized, not on your main domain, or not the page you want ranking.

Impact on Rankings, Link Equity, and Crawl Budget

Here’s where duplicate content really hurts:

Diluted Link Equity: When you have multiple versions of the same content, any backlinks pointing to those pages get spread across all versions instead of consolidating to boost one strong page. Studies show that websites with properly consolidated duplicate content see an average 23% increase in domain authority (Moz Blog).

Wasted Crawl Budget: Search engines have limited time to crawl your site. If they’re spending time crawling duplicate pages, they’re not discovering and indexing your unique, valuable content.

Confused Rankings: Google might not know which page to rank for specific keywords, leading to inconsistent or poor rankings across all versions.

User Experience Issues: Visitors might land on different versions of the same content, creating a confusing experience that can hurt your conversion rates.

Myths vs Facts About Penalties

Let’s bust some common myths:

Myth: Google will ban your site for duplicate content.

Fact: Google rarely penalizes sites for duplicate content unless it’s clearly manipulative.

Myth: Having any duplicate content will destroy your rankings.

Fact: Small amounts of duplicate content are normal and expected. It’s when it becomes excessive that problems arise.

Myth: You need 100% unique content on every page.

Fact: Some duplication is unavoidable (like navigation menus, footers, and legal disclaimers).

As John Mueller from Google explains: “Duplicate content is not a negative ranking factor. It’s not something where we would say ‘this website has duplicate content, we will demote it.’ It’s more that if we find the same content in multiple places, we’ll try to pick one of them to show.” (Search Engine Land)

The real issue isn’t penalties – it’s missed opportunities. When you have duplicate content, you’re not maximizing your site’s potential in search results.

How Google’s Recent Updates Affect Duplicate Content

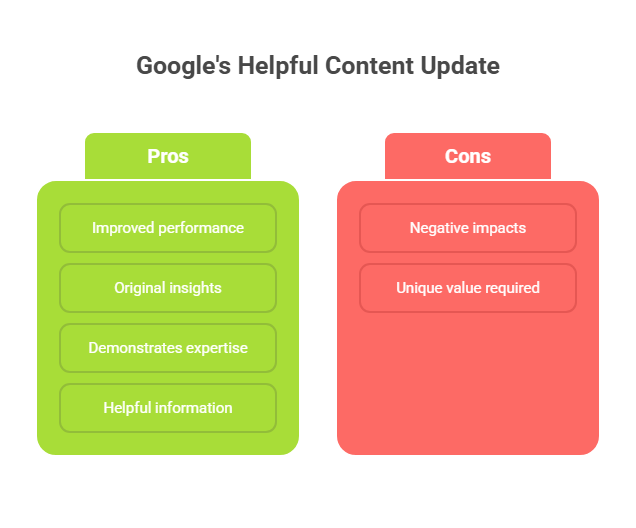

Helpful Content Update

Google's Helpful Content Update (whose signals Google folded into its core ranking systems in March 2024) has made the duplicate content landscape even more important to understand. It targets content that seems to be written for search engines rather than people.

Here’s what this means for duplicate content: Google is getting better at identifying not just duplicate content, but content that doesn’t provide unique value to users. If you’re duplicating content without adding substantial value, you’re more likely to see negative impacts.

The update emphasizes:

- Original insights and analysis

- Content that demonstrates expertise

- Information that’s genuinely helpful to users

Data from BrightEdge shows that websites focusing on unique, helpful content saw 34% better performance post-Helpful Content Update (BrightEdge).

Core Algorithm Changes

Google’s core algorithm updates continue to refine how the search engine evaluates content quality and originality. Recent updates have shown that Google is:

- Better at understanding context and user intent

- More sophisticated in identifying the most authoritative source for information

- Improved at recognizing when content adds value versus when it’s just rehashing existing information

What this means for you: simply avoiding technical duplicate content isn’t enough anymore. You need to ensure your content provides unique value and perspective.

Mobile-First Indexing & AMP Pages

Mobile-first indexing has created new duplicate content challenges, especially with AMP (Accelerated Mobile Pages). Here are the key issues:

AMP vs. Non-AMP Versions: Having both AMP and regular versions of your pages can create duplicate content issues if not properly configured with canonical tags.

Mobile vs. Desktop Content: If your mobile and desktop versions have significantly different content, Google might not understand which version to prioritize.

App Indexing: Content that appears both on your website and in your mobile app needs proper handling to avoid duplication issues.

The solution is proper canonical implementation and ensuring content consistency across all versions of your pages.

How to Find Duplicate Content

Best Tools for Detection (With Pros and Cons)

Let’s dive into the tools that’ll help you uncover duplicate content issues on your site:

Siteliner

Siteliner is one of my go-to tools for finding internal duplicate content. Here’s the breakdown:

Pros:

- Free for up to 250 pages

- Easy-to-understand reports

- Shows percentage of duplicate content

- Identifies common content across pages

- Highlights issues like broken links and redirects

Cons:

- Limited to 250 pages on free plan

- Doesn’t check external duplication

- Can be slow for larger sites

- Basic reporting compared to premium tools

Copyscape

Copyscape excels at finding external duplicate content – basically, who’s copying your content across the web.

Pros:

- Excellent for external duplicate detection

- Premium version offers batch checking

- Can monitor your content for theft

- Detailed match reports

Cons:

- Doesn’t handle internal duplication well

- Can be expensive for large sites

- Requires credits for premium searches

- Limited analysis of why content is duplicated

Screaming Frog

This is the Swiss Army knife of SEO tools, and it’s fantastic for technical duplicate content issues.

Pros:

- Identifies duplicate title tags, meta descriptions, and content

- Shows exact duplicate URLs

- Free version handles up to 500 URLs

- Highly customizable crawling options

- Integrates with other SEO tools

Cons:

- Steep learning curve for beginners

- Free version is limited

- Can overwhelm you with data

- Requires technical knowledge to interpret results

Google Search Console

Don’t overlook this free tool from Google itself. It’s incredibly valuable for understanding how Google sees your content.

Pros:

- Completely free

- Shows exactly what Google has indexed

- The Page indexing report (formerly Coverage) flags statuses such as "Duplicate without user-selected canonical"

- Shows which pages Google considers canonical

Cons:

- Limited historical data

- Doesn’t always show all duplicate content issues

- Can be slow to update

- Requires verification of site ownership

Manual Check: Quick Techniques

Sometimes you don’t need fancy tools. Here are some quick manual techniques:

The Quote Search Method: Take a unique sentence from your content and search for it in quotes on Google. This shows you if that exact text appears elsewhere.

Site: Search Operator: Use “site:yoursite.com” followed by a unique phrase from your content to see if multiple pages on your site contain the same content.

URL Variations Check: Manually test different versions of your URLs (with and without www, with and without trailing slashes, HTTP vs HTTPS) to see if they all load the same content.
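To make the URL variations check systematic, here is a small Python sketch that lists every protocol, www, and trailing-slash combination for a given URL. It only builds the list; it assumes you'll load each variant yourself (in a browser or any HTTP client) and confirm that all but your preferred version return a 301 to it. The example URL is hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def url_variants(url: str) -> list[str]:
    """Return the protocol, www and trailing-slash variants of a URL."""
    parts = urlsplit(url)
    bare_host = parts.netloc.lower().removeprefix("www.")
    bare_path = parts.path.rstrip("/")
    # The site root has only one form ("/"); other paths get both forms
    paths = {bare_path, bare_path + "/"} if bare_path else {"/"}
    variants = {
        urlunsplit((scheme, host, path, parts.query, ""))
        for scheme in ("http", "https")
        for host in (bare_host, "www." + bare_host)
        for path in paths
    }
    return sorted(variants)


for variant in url_variants("https://yoursite.com/blog/seo-tips"):
    print(variant)
```

For a normal page this yields eight URLs; for the homepage it yields four.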

Print Version Check: Look for print versions or alternative versions of your pages that might be creating duplicate content.

How to Fix Duplicate Content (Step-by-Step)

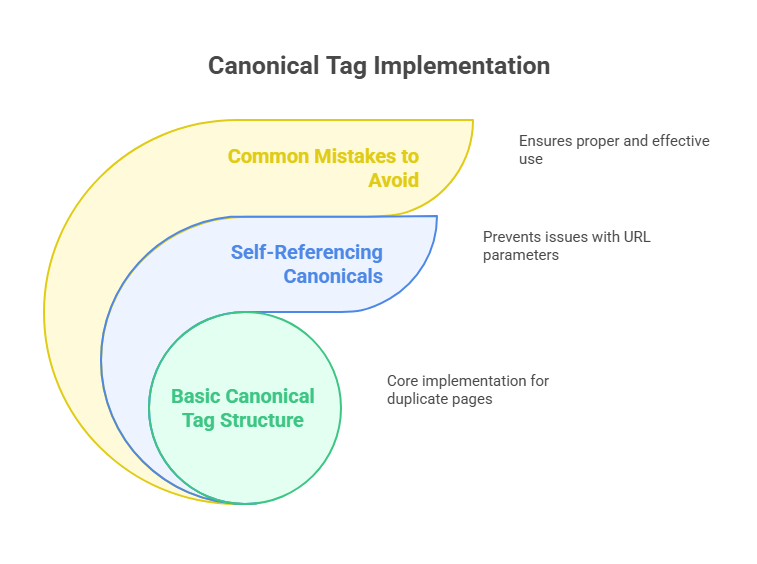

Use Canonical Tags Correctly

Canonical tags are your first line of defense against duplicate content issues. Think of them as a way to tell Google, “Hey, this is the version I want you to focus on.”

Here’s how to implement them correctly:

Basic Canonical Tag Structure:

Place this in the `<head>` section of your duplicate pages:

`<link rel="canonical" href="https://yoursite.com/preferred-page" />`

Self-Referencing Canonicals: Even your preferred pages should have canonical tags pointing to themselves. This might seem redundant, but it prevents issues if someone links to a URL parameter version of your page.

Common Canonical Mistakes to Avoid:

- Don’t use relative URLs – always use absolute URLs

- Don’t canonical to a different page with substantially different content

- Don’t use multiple canonical tags on the same page

- Don’t canonical to pages that redirect or return 404 errors
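Some of these mistakes can be caught from a page's HTML alone. Below is a minimal sketch using Python's built-in `html.parser` that flags a missing canonical, multiple canonicals, and relative canonical URLs. It assumes you already have the page HTML as a string. It does not check whether the target redirects or returns a 404, or whether its content matches, since those checks need HTTP requests. The sample page is hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names, but not values
        values = dict(attrs)
        rel_tokens = (values.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_tokens:
            self.hrefs.append(values.get("href") or "")


def canonical_problems(html: str) -> list[str]:
    """Flag canonical mistakes that are visible in a single page's HTML."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    if not finder.hrefs:
        return ["missing canonical tag"]
    problems = []
    if len(finder.hrefs) > 1:
        problems.append(f"{len(finder.hrefs)} canonical tags (expected 1)")
    for href in finder.hrefs:
        # An absolute URL has a scheme such as https
        if not urlsplit(href).scheme:
            problems.append(f"relative canonical URL: {href!r}")
    return problems


page = """<html><head>
<link rel="canonical" href="/preferred-page">
<link rel="canonical" href="https://yoursite.com/preferred-page" />
</head><body>...</body></html>"""
print(canonical_problems(page))
```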

Pro Tip: If you’re using WordPress, plugins like Yoast SEO or RankMath can handle canonicals automatically, but always double-check their implementation.

301 Redirects: When and How to Use Them

301 redirects are perfect when you want to permanently consolidate duplicate pages. Here’s when to use them:

Perfect Scenarios for 301 Redirects:

- Multiple URLs showing identical content (like HTTP vs HTTPS versions)

- Old pages you want to merge with newer, better versions

- URL structure changes

- Consolidating similar pages with minimal unique value

How to Implement 301 Redirects:

For Apache servers, add this to your .htaccess file:

`Redirect 301 /old-page.html https://yoursite.com/new-page.html`

For WordPress, you can use plugins like Redirection or Simple 301 Redirects.

Important: Don’t create redirect chains. If page A redirects to page B, which redirects to page C, consolidate them so A redirects directly to C.
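If you keep your redirects in a list or spreadsheet, collapsing chains can be automated. This Python sketch follows each redirect to its final destination, raises an error on loops, and prints Apache `Redirect 301` lines in the same form as the .htaccess example above. The redirect map and the `yoursite.com` domain are placeholders.

```python
def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Point every old URL straight at its final destination (A -> C, not A -> B -> C)."""
    flat = {}
    for source, target in redirects.items():
        seen = {source}
        # Follow the chain until the target is not itself redirected
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop starting at {source}")
            seen.add(target)
            target = redirects[target]
        flat[source] = target
    return flat


def htaccess_lines(redirects: dict[str, str], domain: str = "https://yoursite.com") -> list[str]:
    """Render the flattened map as Apache mod_alias Redirect 301 lines."""
    return [
        f"Redirect 301 {source} {domain}{target}"
        for source, target in sorted(flatten_redirects(redirects).items())
    ]


chain = {"/page-a": "/page-b", "/page-b": "/page-c"}
for line in htaccess_lines(chain):
    print(line)
```

Both `/page-a` and `/page-b` end up pointing directly at `/page-c`.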

Update or Merge Low-Value Pages

Sometimes the best solution is to combine or significantly update duplicate content:

When to Merge Pages:

- You have multiple pages targeting the same keyword

- Pages have overlapping content with minimal unique value

- Older pages are outdated but still receive some traffic

How to Merge Effectively:

- Identify the strongest page (usually the one with the most traffic and backlinks)

- Combine the best content from all versions

- Add new, unique insights to make the merged page even better

- 301 redirect the weaker pages to the consolidated page

- Update internal links to point to the new consolidated page

Use Meta Tags to Control Indexing

Sometimes you need pages to exist but don’t want them indexed. Meta robots tags are perfect for this:

Noindex Tag: `<meta name="robots" content="noindex, follow" />`

This tells search engines not to index the page but to follow links on it.

When to Use Noindex:

- Thank you pages

- Search result pages on your site

- Login pages

- Duplicate pages you can’t redirect but don’t want indexed
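It's easy for a theme or plugin update to drop a noindex tag silently. Here is a minimal sketch that checks whether pages you intend to keep out of the index actually carry one. It reads only the `<meta name="robots">` tag (treating `none` as implying noindex), so it assumes you're not relying on an `X-Robots-Tag` HTTP header or crawler-specific meta tags. The URLs and HTML snippets are hypothetical.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        values = dict(attrs)
        if tag == "meta" and (values.get("name") or "").lower() == "robots":
            for directive in (values.get("content") or "").split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def is_noindexed(html: str) -> bool:
    """True if the page's meta robots tag blocks indexing ("none" implies noindex)."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    finder.close()
    return bool(finder.directives & {"noindex", "none"})


# Hypothetical pages you intend to keep out of the index
should_be_noindexed = {
    "https://yoursite.com/thank-you": '<meta name="robots" content="noindex, follow" />',
    "https://yoursite.com/search?q=pizza": '<meta name="robots" content="index, follow">',
}
for url, html in should_be_noindexed.items():
    if not is_noindexed(html):
        print("missing noindex:", url)
```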

Parameter Handling: Google retired Search Console's URL Parameters tool in 2022, so handle URL parameters that create duplicate content with canonical tags and consistent internal linking instead.

Set Preferred URL Structures in CMS or GSC

Prevention is better than cure. Set up your preferred URL structure from the beginning:

In Google Search Console:

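- Use the URL Inspection tool to check which URL Google has selected as canonical (the old preferred-domain setting was removed in 2019, so enforce www vs non-www with redirects)

- Check the Page indexing report for parameter-based duplicates (the URL Parameters tool was retired in 2022)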

- Submit your XML sitemap with preferred URLs only

In Your CMS:

- Configure your site to use one URL structure consistently

- Set up automatic redirects for common variations

- Use plugins that handle technical SEO automatically
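Once you've chosen a URL convention, a normalizing function makes it easy to check internal links and sitemap entries against it. This sketch assumes one possible house style (https, no `www.`, no trailing slash except on the homepage); flip those choices if your site uses the opposite convention. It leaves paths case-sensitive because many servers treat them that way. The example links are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def normalize_url(url: str) -> str:
    """Rewrite a URL into one assumed house style: https, no www, no trailing slash."""
    parts = urlsplit(url)
    host = parts.netloc.lower().removeprefix("www.")
    # Keep "/" for the homepage; strip the trailing slash everywhere else
    path = parts.path.rstrip("/") or "/"
    return urlunsplit(("https", host, path, parts.query, ""))


internal_links = [
    "http://www.yoursite.com/blog/seo-tips/",
    "https://yoursite.com/blog/seo-tips",
]
for link in internal_links:
    if link != normalize_url(link):
        print(f"{link} -> {normalize_url(link)}")
```

Any link that differs from its normalized form should either be updated in your templates or be covered by a redirect.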

Advanced Duplicate Content Fixes

hreflang Tags for International Sites

If you have an international website with similar content in different languages or regions, hreflang tags are essential:

Basic Implementation:

`<link rel="alternate" hreflang="en-us" href="https://yoursite.com/en/page" />`

`<link rel="alternate" hreflang="es-mx" href="https://yoursite.com/es/page" />`

Common Hreflang Mistakes:

- Not including a self-referencing hreflang tag

- Missing return tags (if page A references page B, page B must reference page A)

- Using incorrect language/country codes

- Not including an x-default tag for unmatched languages

Pro Tip: Use the x-default hreflang for your primary language version: `<link rel="alternate" hreflang="x-default" href="https://yoursite.com/en/page" />`
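Missing self-references and missing return tags are easy to miss by eye across many pages. Here is a minimal Python sketch that checks both, assuming you've already collected each page's hreflang annotations into a dict of `{page_url: {hreflang_code: href}}` (for example from a crawl). It skips the x-default entry during the return-tag check and does not validate language or country codes. The data below is hypothetical.

```python
def hreflang_problems(declared: dict[str, dict[str, str]]) -> list[str]:
    """Check self-references and return tags across a set of crawled pages."""
    problems = []
    for page, alternates in declared.items():
        if page not in alternates.values():
            problems.append(f"{page}: no self-referencing hreflang")
        for lang, target in alternates.items():
            if target == page or lang == "x-default":
                continue
            back = declared.get(target)
            if back is None:
                problems.append(f"{page}: {lang} alternate {target} was not crawled")
            elif page not in back.values():
                problems.append(f"{page}: {target} has no return tag")
    return problems


declared = {
    "https://yoursite.com/en/page": {
        "en-us": "https://yoursite.com/en/page",
        "es-mx": "https://yoursite.com/es/page",
        "x-default": "https://yoursite.com/en/page",
    },
    # The Spanish page forgot to point back at the English page
    "https://yoursite.com/es/page": {"es-mx": "https://yoursite.com/es/page"},
}
for problem in hreflang_problems(declared):
    print(problem)
```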

Structured Data and Consistency Tips

Structured data can help Google understand your content better and reduce duplicate content confusion:

Best Practices:

- Use consistent structured data across all versions of similar pages

- Include unique identifiers in your schema markup

- Ensure your structured data matches the actual page content

- Use organization schema to establish authorship and authority

Types of Structured Data That Help:

- Article schema for blog posts and news content

- Product schema for e-commerce sites

- FAQ schema for comprehensive guides

- Organization schema for brand clarity
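One way to keep structured data consistent across URL variants is to generate it from the canonical URL, so every version of a page emits the same identifier. The Python sketch below builds an Article JSON-LD block using standard schema.org properties. Appending `#article` to form the `@id` is a common convention, not a requirement, and the headline, author, and date are hypothetical placeholders.

```python
import json


def article_jsonld(canonical_url: str, headline: str, author: str, published: str) -> str:
    """Build an Article JSON-LD script whose @id is derived from the canonical URL."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        # Same identifier on every variant of the page, because it comes from the canonical URL
        "@id": canonical_url + "#article",
        "mainEntityOfPage": canonical_url,
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": published,
    }
    return '<script type="application/ld+json">' + json.dumps(data, indent=2) + "</script>"


print(article_jsonld("https://yoursite.com/blog/seo-tips", "Ten SEO Tips", "Jane Doe", "2025-01-15"))
```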

Duplicate Issues on Mobile & AMP

Mobile and AMP create unique duplicate content challenges:

AMP Canonicals: Your AMP page should canonical to your main page:

`<link rel="canonical" href="https://yoursite.com/article" />`

And your main page should reference the AMP version:

`<link rel="amphtml" href="https://yoursite.com/article/amp" />`

Mobile Content Consistency: Ensure your mobile and desktop versions have substantially the same content. Google uses mobile-first indexing, so your mobile version is what they primarily consider.
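The canonical and amphtml pair described above has to agree in both directions. Here is a minimal sketch that checks it, assuming you've already extracted each page's `canonical` and `amphtml` link targets into a dict (for example from a crawl export). The URLs are hypothetical; the AMP page in the example makes the common mistake of canonicalizing to itself.

```python
def amp_pairing_problems(links: dict[str, dict[str, str]]) -> list[str]:
    """Check that each AMP page's canonical points back at the page linking to it."""
    problems = []
    for url, tags in links.items():
        amp_url = tags.get("amphtml")
        if amp_url is None:
            continue  # no AMP version declared for this page
        amp_tags = links.get(amp_url)
        if amp_tags is None:
            problems.append(f"{url}: AMP page {amp_url} was not crawled")
        elif amp_tags.get("canonical") != url:
            problems.append(
                f"{amp_url}: canonical should be {url}, found {amp_tags.get('canonical')}"
            )
    return problems


links = {
    "https://yoursite.com/article": {
        "canonical": "https://yoursite.com/article",
        "amphtml": "https://yoursite.com/article/amp",
    },
    "https://yoursite.com/article/amp": {"canonical": "https://yoursite.com/article/amp"},
}
for problem in amp_pairing_problems(links):
    print(problem)
```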

Pro Tips to Prevent Duplicate Content

Create Unique, Original Content From Day One

Prevention starts with your content creation process. Here’s how to ensure originality from the start:

Develop a Content Guidelines Document:

- Define what makes content unique for your brand

- Set minimum word counts for different content types

- Establish requirements for original research or insights

- Create templates that encourage unique perspectives

Content Audit Before Publishing: Before any content goes live, ask these questions:

- Does this content offer a unique perspective or insight?

- Have we covered this exact topic before?

- What makes this different from existing content on the web?

- Does this solve a specific problem for our audience?

Encourage Original Research: Some of the best ways to ensure unique content include:

- Conducting original surveys or studies

- Interviewing industry experts

- Sharing personal experiences and case studies

- Providing behind-the-scenes insights

Avoid Boilerplate Across Pages

Boilerplate content is necessary, but too much can create duplicate content issues:

Minimize Repetitive Content:

- Keep navigation menus concise

- Vary sidebar content across different page types

- Use dynamic content where possible

- Limit footer content to essentials

Smart Boilerplate Strategies:

- Use different call-to-action text on different pages

- Customize legal disclaimers for different content types

- Vary your author bios across different articles

- Use conditional content based on page categories

Handle URL Parameters

URL parameters can create massive duplicate content issues. Here’s how to handle them:

Common Parameter Issues:

- Session IDs: ?sessionid=12345

- Sorting options: ?sort=price

- Filtering: ?color=red&size=large

- Tracking parameters: ?utm_source=facebook
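When auditing crawl data or analytics exports, it helps to collapse parameter variants like these into one URL. This Python sketch drops parameters assumed not to change page content (session IDs and `utm_` tracking tags here) and sorts the rest so `?color=red&size=large` and `?size=large&color=red` compare equal. Which parameters are safe to drop is a per-site decision; the `IGNORED_PARAMS` and `IGNORED_PREFIXES` sets are assumptions to adjust.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption for this sketch: these parameters never change what the page shows
IGNORED_PARAMS = {"sessionid"}
IGNORED_PREFIXES = ("utm_",)


def strip_params(url: str) -> str:
    """Drop content-neutral parameters and sort the rest into a stable order."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS and not key.lower().startswith(IGNORED_PREFIXES)
    ]
    kept.sort()
    # The fragment is dropped because it is never sent to the server
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


print(strip_params("https://yoursite.com/shoes?utm_source=facebook&size=large&sessionid=12345&color=red"))
```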

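What to Do Now That the URL Parameters Tool Is Gone:

- Google retired Search Console's URL Parameters tool (previously under Legacy Tools) in 2022, saying its crawlers had become much better at working out which parameters matter

- Add canonical tags on parameterized URLs pointing to the clean version of the page

- Link internally to clean URLs, not to sorted, filtered, or tracked versions

- Include only clean URLs in your XML sitemap

Pro Tip: robots.txt can keep crawlers out of parameter combinations that generate endless URLs (like stacked filters), but Google can't see the canonical tag on a URL it isn't allowed to crawl, so pick one approach per URL pattern.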

Audit Regularly – Build It Into Your Workflow

Make duplicate content checking part of your regular SEO maintenance:

Monthly Checks:

- Review Google Search Console for new duplicate content issues

- Check for new pages that might be creating duplication

- Monitor your most important pages for content theft

Quarterly Deep Audits:

- Run full site crawls with Screaming Frog or similar tools

- Check external duplicate content with Copyscape

- Review and update canonical implementations

- Analyze new content for potential duplication issues

Set Up Alerts:

- Google Alerts for your brand name + exact content phrases

- Copyscape alerts for content theft

- Google Search Console notifications for indexing issues

Duplicate Content Audit: DIY Checklist

Audit Template

Here's a comprehensive checklist you can turn into your own audit template:

Pre-Audit Preparation:

- Set up Google Search Console (if not already done)

- Install Screaming Frog or preferred crawling tool

- Create Copyscape account for external duplicate checking

- Document current site structure and URL patterns

Technical Duplicate Content Check:

- Check for www vs non-www duplication

- Verify HTTP vs HTTPS consistency

- Test trailing slash variations

- Identify parameter-based duplicate URLs

- Check for mobile vs desktop content differences

Checklist Preview (Top 10 Things To Check)

Here are the most critical items to check during any duplicate content audit:

- URL Variations: Test your main pages with and without www, trailing slashes, and different protocols

- Canonical Tags: Verify every page has a canonical tag pointing to the preferred version

- Meta Descriptions: Check for identical meta descriptions across different pages

- Title Tags: Identify pages with duplicate or very similar title tags

- Internal Linking: Ensure internal links point to canonical versions of pages

- XML Sitemap: Verify your sitemap only includes canonical URLs

- Content Similarity: Use tools to identify pages with high content similarity percentages

- Category/Tag Pages: Check if category and tag pages have sufficient unique content

- Product Descriptions: Ensure product pages don’t use identical manufacturer descriptions

- External Duplication: Search for your content on other websites and check if proper attribution exists
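Duplicate titles and meta descriptions are easy to spot once your crawl data is in code. This Python sketch groups pages by a chosen field after collapsing whitespace and case, and skips empty values. It assumes each page is a dict with `url`, `title`, and `meta_description` keys; these are hypothetical names, so map them to whatever columns your crawler exports (for example via `csv.DictReader`).

```python
from collections import defaultdict


def duplicate_field_groups(pages: list[dict[str, str]], field: str) -> dict[str, list[str]]:
    """Map each repeated value of `field` (e.g. title) to the URLs that share it."""
    groups: dict[str, list[str]] = defaultdict(list)
    for page in pages:
        # Collapse whitespace and case so trivial differences don't hide duplicates
        value = " ".join(page.get(field, "").split()).lower()
        if value:
            groups[value].append(page["url"])
    return {value: sorted(urls) for value, urls in groups.items() if len(urls) > 1}


# Hypothetical crawl export rows
pages = [
    {"url": "https://yoursite.com/red-shoes", "title": "Shoes | YourSite", "meta_description": "Buy red shoes."},
    {"url": "https://yoursite.com/blue-shoes", "title": "Shoes  | YourSite", "meta_description": "Buy blue shoes."},
    {"url": "https://yoursite.com/about", "title": "About | YourSite", "meta_description": ""},
]
print(duplicate_field_groups(pages, "title"))
print(duplicate_field_groups(pages, "meta_description"))
```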

Post-Audit Action Items:

- Prioritize fixes based on traffic and importance of affected pages

- Create 301 redirect plan for pages to be consolidated

- Update content to make similar pages more unique

- Set up monitoring to catch future duplicate content issues

- Schedule follow-up audit in 3-6 months

Expert Insights on Duplicate Content

Quotes from SEO Industry Leaders

Let’s look at what some of the top SEO experts have said about duplicate content:

“Duplicate content is not a negative ranking factor. It’s not something where we would say ‘this website has duplicate content, we will demote it.’ It’s more that if we find the same content in multiple places, we’ll try to pick one of them to show.” – John Mueller, Google Search Advocate (Search Engine Land)

This reinforces what we discussed earlier – it’s not about penalties, it’s about Google choosing which version to show, and you want that choice to be yours.

“The biggest issue with duplicate content is not that Google will penalize you, but that you’re diluting your own efforts. Instead of having one strong page, you have multiple weak pages competing against each other.” – Barry Schwartz, Search Engine Land (Search Engine Land)

This perfectly captures the real problem with duplicate content – it’s about missed opportunities, not penalties.

“Duplicate content problems are often symptoms of larger site architecture issues. Fix the underlying structure, and the duplicate content often resolves itself.” – Rand Fishkin, SparkToro (SparkToro)

This insight highlights why technical SEO and content strategy need to work together.

Latest Research on Content Syndication & Canonicals

Recent studies have revealed some interesting insights about how duplicate content affects modern SEO:

Content Syndication Research: A 2024 study of over 10,000 syndicated articles found that when proper canonical tags were used, the original publisher retained 89% of their search visibility even when content appeared on larger, more authoritative sites (Content Marketing Institute).

However, when canonical tags were missing or incorrect, original publishers lost an average of 67% of their search traffic for syndicated content.

E-commerce Duplicate Content Study: Research analyzing 500 e-commerce sites found that stores using manufacturer product descriptions (creating external duplicate content) ranked an average of 3.2 positions lower than stores with unique product descriptions (Backlinko).

More importantly, the stores with unique descriptions had 43% higher conversion rates, suggesting that unique content doesn’t just help SEO – it helps sales.

News and Timeliness Factor: Google’s treatment of duplicate content varies significantly for news content. Recent algorithm updates show that for breaking news, Google prioritizes the first to publish, but for evergreen content, authority and user experience signals matter more than publish date.

FAQs About Duplicate Content

Will Google Penalize My Site?

This is probably the most common question I get, and I understand why it causes so much anxiety.

The short answer is: probably not, but you’re still missing out.

Google rarely applies manual penalties for duplicate content unless you’re doing something clearly manipulative, like:

- Automatically generating thousands of similar pages with minimal unique value

- Scraping content from other sites without adding value

- Creating doorway pages that are essentially duplicates targeting different keywords

However, even without a penalty, duplicate content can hurt you by:

- Diluting your link equity across multiple pages

- Confusing Google about which page to rank

- Wasting your crawl budget on duplicate pages

- Creating a poor user experience

Think of it this way: Google won’t punish you for duplicate content, but they won’t reward you for it either.

Can I Repost Content on Medium or LinkedIn?

Absolutely! In fact, republishing content on platforms like Medium, LinkedIn, or industry publications can be a great way to expand your reach.

Here’s how to do it right:

Wait Before Republishing: Give your original post time to get indexed and gain traction (usually 1-2 weeks) before republishing elsewhere.

Use Canonical Tags: Some platforms, such as Medium, let you set a canonical link pointing back to your original post; LinkedIn articles don't offer this option. Whenever the setting exists, use it.

Add Platform-Specific Introductions: Start your republished content with a brief introduction tailored to that platform’s audience.

Link Back to the Original: Include a clear link back to the original post on your site, both for SEO and to drive traffic.

Modify for the Platform: Slightly adapt the content for each platform’s audience and format preferences.

Pro Tip: Medium automatically adds a canonical tag if you use their import tool, which makes republishing even easier.

Should I Worry About Scrapers?

Content scraping is frustrating, but it’s usually not as harmful as people think.

When NOT to Worry:

- Your site is well-established with good authority

- The scraper site has low authority or obvious spam indicators

- Google can clearly identify you as the original source

- The scraped content appears on obviously low-quality sites

When to Take Action:

- High-authority sites are republishing your content without permission

- Scraped content is outranking your original content

- The scraper is making money from your content through ads

- Your brand reputation could be affected

What You Can Do:

- DMCA Takedown Requests: File copyright infringement claims with Google and the hosting provider

- Contact the Site Owner: Sometimes a polite email requesting removal or proper attribution works

- Strengthen Your Original: Update and improve your original content to make it clearly superior

- Build More Authority: Focus on building your site’s authority through legitimate link building and content marketing

Prevention Tips:

- Include internal links in your content (scrapers often don’t remove these)

- Add your brand name and URL within the content text

- Use tools like Copyscape alerts to catch scraping quickly

- Consider using RSS feed excerpts instead of full content

Final Thoughts

Keep Content Unique, Useful, and Easily Crawlable

After helping hundreds of websites deal with duplicate content issues, I’ve learned that the best approach combines technical fixes with a genuine focus on creating value for users.

Yes, you need to handle the technical aspects – canonical tags, redirects, proper URL structure. These are the foundation of good SEO hygiene. But don’t stop there.

The websites that really succeed are the ones that go beyond just avoiding duplicate content. They actively create content that’s so unique, valuable, and well-optimized that duplicate content becomes a non-issue.

Focus on Solving User Intent, Not Just Technical Fixes

Here’s something I want you to remember: Google’s goal is to provide the best possible results for users. When you align your content strategy with that goal, most duplicate content issues solve themselves.

Instead of asking “How can I avoid duplicate content?” start asking:

- “What unique value does this page provide?”

- “How does this content help my users better than existing alternatives?”

- “What insights or perspectives can I share that others haven’t?”

When you create content with this mindset, you naturally avoid duplication because you’re focused on providing something genuinely new and valuable.

Next Steps: What You Should Do Today

Don’t let this guide sit in your bookmarks. Here’s your action plan:

Today (30 minutes):

- Set up Google Search Console if you haven’t already

- Run a quick site: search for your domain to see what Google has indexed

- Check your top 5 most important pages for basic duplicate content issues

This Week (2-3 hours):

- Use Siteliner to scan your site for internal duplicate content

- Set up Copyscape alerts for your most important content

- Create a simple spreadsheet to track duplicate content issues you find

This Month (ongoing):

- Implement fixes for your highest-priority duplicate content issues

- Set up proper canonical tags across your site

- Create a content creation process that prevents future duplication

- Schedule regular duplicate content audits (quarterly is usually sufficient)

Remember, duplicate content isn’t a crisis – it’s an opportunity. Every piece of duplicate content you fix is a chance to consolidate your authority, improve user experience, and boost your search rankings. The websites that treat duplicate content as an ongoing optimization opportunity, rather than a problem to solve once, are the ones that see the biggest long-term SEO success.